(2019-Feb-06) Working with Azure Data Factory (ADF) enables me to build and monitor my Extract Transform Load (ETL) workflows in Azure. My ADF pipelines are a cloud version of the ETL projects I previously built in SQL Server SSIS.

And prior to this point, all my sample ADF pipelines were developed in so-called "Live Data Factory Mode" using my personal workspace, i.e. all changes had to be published in order to be saved. This hasn't been the best practice from my side, and I needed to start using a source control tool to preserve and version my development code.

Back in August of 2018, Microsoft introduced GitHub integration for Azure Data Factory objects, which was a great improvement from a team development perspective: https://azure.microsoft.com/en-us/blog/azure-data-factory-visual-tools-now-supports-github-integration/

So now is the day to put all my ADF pipeline samples into my personal GitHub repository.

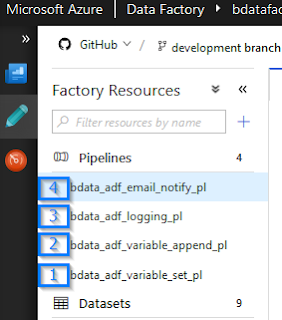

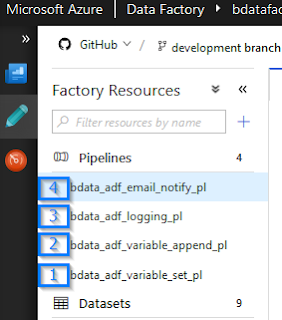

Each of my previous blog posts:

1) Setting Variables in Azure Data Factory Pipelines

2) Append Variable activity in Azure Data Factory: Story of combining things together

3) System Variables in Azure Data Factory: Your Everyday Toolbox

4) Email Notifications in Azure Data Factory: Failure is not an option

Has a corresponding pipeline created in my Azure Data Factory:

And all of them are now publicly available in this GitHub repository:

https://github.com/NrgFly/Azure-DataFactory

Let me show you how I did this using my personal GitHub account; you can do this with enterprise GitHub accounts as well.

Step 1: Set up Code Repository

A) Open your existing Azure Data Factory and select the "Set up Code Repository" option from the top left "Data Factory" menu:

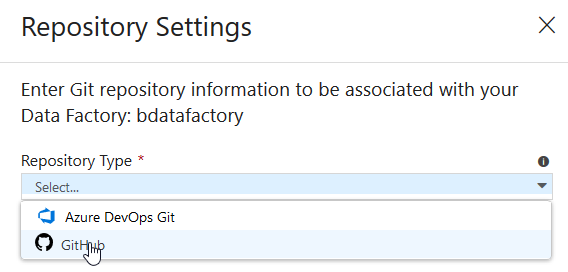

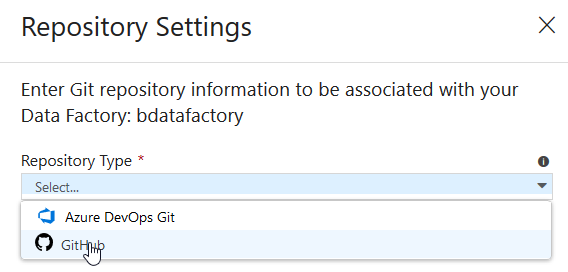

B) then choose "GitHub" as your Repository Type:

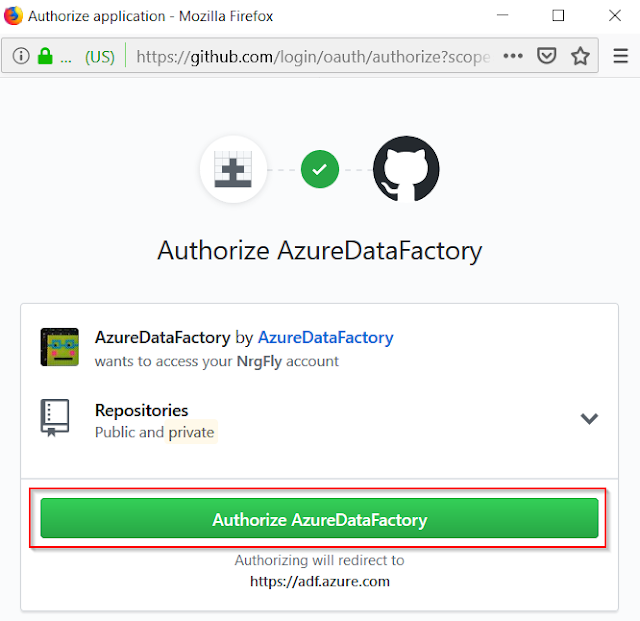

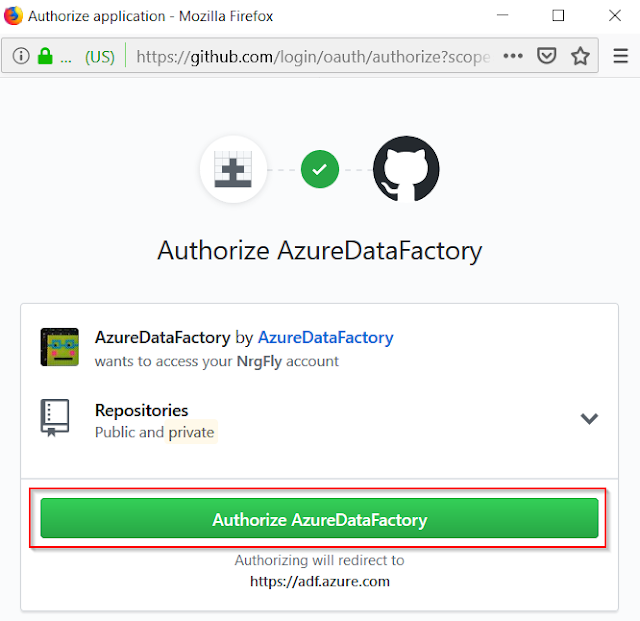

C) and make sure you authenticate your GitHub repository with the Azure Data Factory itself:

Step 2: Saving your content to GitHub

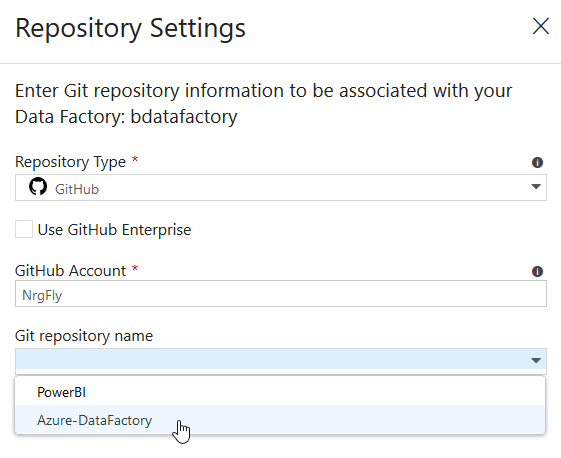

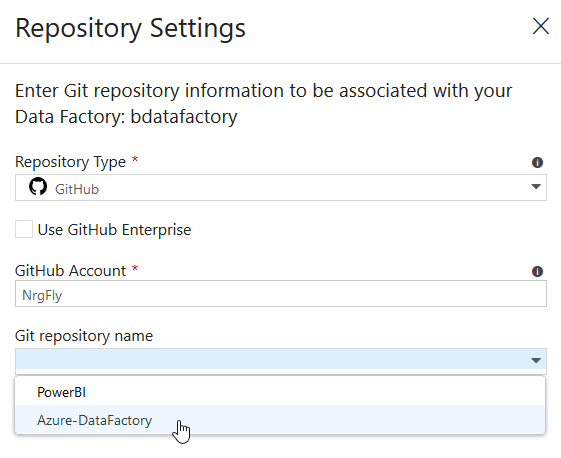

After selecting an appropriate GitHub code repository for your ADF artifacts and pressing the Save button:

You can validate them all in GitHub itself. Source code integration allowed me to save all my ADF artifacts: pipelines, datasets, linked services, and triggers.

And that's where I can see all four of my ADF pipelines:
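The same check can be done from a local clone of the repository. Below is a minimal Python sketch that counts the JSON files per artifact type. The folder names (`pipeline`, `dataset`, `linkedService`, `trigger`) are my assumption about how the ADF Git integration lays out the repository, and `count_adf_artifacts` is a hypothetical helper, so adjust both to what you actually see in your repo.

```python
from pathlib import Path

# Assumed folder names created by the ADF Git integration; verify in your own repo.
ARTIFACT_FOLDERS = ("pipeline", "dataset", "linkedService", "trigger")


def count_adf_artifacts(repo_root):
    """Count the *.json files in each artifact folder of a local clone."""
    root = Path(repo_root)
    counts = {}
    for folder in ARTIFACT_FOLDERS:
        folder_path = root / folder
        if folder_path.is_dir():
            counts[folder] = len(list(folder_path.glob("*.json")))
        else:
            # A missing folder simply means no artifacts of that type were saved.
            counts[folder] = 0
    return counts


if __name__ == "__main__":
    # Run from the root of the cloned repository.
    for folder, count in count_adf_artifacts(".").items():
        print(f"{folder}: {count}")
```

For my repository, I would expect the `pipeline` count to match the four pipelines listed above.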

Step 3: Testing your further changes in ADF pipelines

Knowing that all my ADF objects are now stored in GitHub, let's see if a code change made in Azure Data Factory gets synchronized there.

I add a new description to my pipeline with Email notifications:

The problem with using the Git option in ADF is that:

1- You cannot cherry-pick your pipeline deployment. For example, if you have 10 pipelines in an ADF and you want to deploy just one of them, you can't.

2- Changing a CONNECTION object is not easy and in some cases not possible. For example, a developer develops an ADF with 10 pipelines, the connection objects are NOT using Azure Key Vault (AKV), and you want to change the connection objects to use AKV.

3- and lots more

To solve the issue, I created my ADF in the portal, then extracted the JSON code to my computer, and then created in Visual Studio ....

1- One solution per ADF

2- Multiple project as mentioned...

3- prjMasterPipeline

4- prjGlobalPipeline

5- prjPipeline01

6- prjPipeline02

.

.

15- prjPipeline10

Now I can

- cherry-pick my deployment

- change the connection objects how I like or how DevOps wants me to

- have everything in VS so I can have it in Azure DevOps or Git

- and lots more

Good luck

Nik - Shahriar Nikkhah

Thanks for your comment, Nik, you have some valid points.

On a positive side:

1) You can treat your Data Factory as a complete Data Transformation project/solution to be further synced in GitHub

2) You can always change non-AKV based data connectors to AKV and commit that change to source control; along with that, a practice can be established to always create linked services for data connectors based on AKV

3) ADF GitHub integration (or other Git tools) is a great step toward continuous integration and delivery using DevOps. I've just blogged about it: http://datanrg.blogspot.com/2019/02/continuous-integration-and-delivery.html
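As a small illustration of point 2, here is a hedged Python sketch that checks whether a linked service definition from the repository already references Azure Key Vault. It assumes that an AKV-backed secret appears in the JSON as an object with `"type": "AzureKeyVaultSecret"`; the helper `uses_key_vault` and both sample definitions (names, secret names, connection string) are hypothetical and only there to show the idea.

```python
def uses_key_vault(node):
    """Return True if any nested object is marked as an Azure Key Vault secret."""
    if isinstance(node, dict):
        # Assumption: AKV references are objects with this "type" value.
        if node.get("type") == "AzureKeyVaultSecret":
            return True
        return any(uses_key_vault(value) for value in node.values())
    if isinstance(node, list):
        return any(uses_key_vault(item) for item in node)
    return False


# Hypothetical linked service that pulls its connection string from AKV.
AKV_BASED = {
    "name": "ExampleSqlViaKeyVault",
    "properties": {
        "type": "AzureSqlDatabase",
        "typeProperties": {
            "connectionString": {
                "type": "AzureKeyVaultSecret",
                "store": {
                    "referenceName": "ExampleKeyVault",
                    "type": "LinkedServiceReference",
                },
                "secretName": "example-sql-connection",
            }
        },
    },
}

# Hypothetical linked service with the secret stored inline.
INLINE_SECRET = {
    "name": "ExampleSqlInline",
    "properties": {
        "type": "AzureSqlDatabase",
        "typeProperties": {
            "connectionString": {"type": "SecureString", "value": "<redacted>"}
        },
    },
}

if __name__ == "__main__":
    for definition in (AKV_BASED, INLINE_SECRET):
        print(definition["name"], uses_key_vault(definition))
```

Running a check like this over every file in the linked service folder of the repository would give a quick list of connections that still need to be moved to AKV.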