(2018-Oct-15) Working with Azure Data Factory you always tend to compare its functionality with well established ETL packages in SSIS. Data flow task have been recreated as Data Copy activities; logical components have found they cloud-based siblings; as well as new kids on the block, such as Databricks and Machine Learning activities could boost adoption rate of Azure Data Factory (ADF) pipelines.

Support for local variables hasn't always been available in ADF and was only recently introduced to already available pipeline parameters. This addition makes more flexible to create interim properties (variables) that you can adjust multiple times within a workflow of your pipeline.

Here is my case-study to test this functionality.

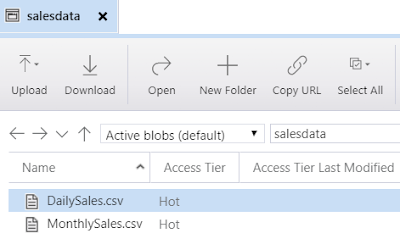

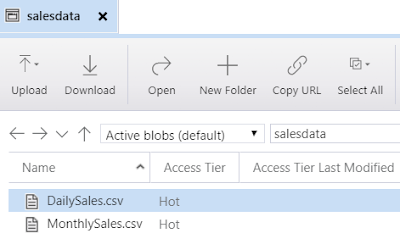

I have a simple SQL Database with 2 tables that could hold daily and monthly sales data which I plan to load from a sample set of CSV data files from my Blob storage in Azure.

My new ADF pipeline has an event trigger that passes a file path and file name values from newly created objects in my Blob storage container:

The logic then would be to check a data feed type (Daily or Monthly) based on a file name and load data to the corresponding table in SQL Database in Azure.

And here is where this [Set Variable] activity comes as a very handy tool to store a value based on a define expression of my variable:

Then I define two sub-tasks to copy data from those flat files into corresponding tables based on the value of the FeedType variable:

And once this all being done, I place two different data files into by Blob storage container and ADF Pipeline trigger successfully executes the same pipeline twice to load data into two separate tables:

Which I can further check and validate in my Azure SQL Database:

My [Set Variable] activity has been tested successfully!

And it's one more point toward using ADF pipelines more often.

Support for local variables hasn't always been available in ADF and was only recently introduced to already available pipeline parameters. This addition makes more flexible to create interim properties (variables) that you can adjust multiple times within a workflow of your pipeline.

Here is my case-study to test this functionality.

I have a simple SQL Database with 2 tables that could hold daily and monthly sales data which I plan to load from a sample set of CSV data files from my Blob storage in Azure.

My new ADF pipeline has an event trigger that passes a file path and file name values from newly created objects in my Blob storage container:

The logic then would be to check a data feed type (Daily or Monthly) based on a file name and load data to the corresponding table in SQL Database in Azure.

And here is where this [Set Variable] activity comes as a very handy tool to store a value based on a define expression of my variable:

Then I define two sub-tasks to copy data from those flat files into corresponding tables based on the value of the FeedType variable:

And once this all being done, I place two different data files into by Blob storage container and ADF Pipeline trigger successfully executes the same pipeline twice to load data into two separate tables:

Which I can further check and validate in my Azure SQL Database:

My [Set Variable] activity has been tested successfully!

And it's one more point toward using ADF pipelines more often.

What condition you have written in the true activity based on the variable value

ReplyDeleteI am mean how would we access the variable value if we have array type variable

Yes, you can assign your array type variable to a For Each container and then iterate though each of the array values using item() as reference point for individual values. I've blogged about this as well: https://datanrg.blogspot.com/2018/10/append-variable-activity-in-azure-data.html

DeleteI am already inside For each container and I am setting this variable to the list of error files created during the run of copy data activity which is iterative activity for list of objects. I have assigned the values to my array variable named as FileList as below

Delete@activity('CheckforSFerrorfile').output.childitems

where CheckforSFerrorfile is GetMetadata activity

Now in next hop I want to check for first value of this array the Type of Item returned by getmetadata Activity i.e either 'File' or 'Folder' and based on the value I want to do operation in If COndition Activity.

My expression for Ifcondition activity is as below

@contains(variables('FileList'),'Folder')

Please help me

Hi Gaurav, to get a first element from your array variable is easy. You don't need to process it through a loop container.

ReplyDeleteThis expression will give you the first element of your array: variables('FileList')[0].

I've created an example in posted in my personal GitHub: https://github.com/NrgFly/Azure-DataFactory/blob/master/Samples/pipeline/bdata_adf_variable_array_first_element.json

First, I manually create an array variable FileList := @createArray('File', 'Folder')

And then I set another variable to the first element of the array - FirstArrayElement := @variables('FileList')[0]

And then you can do what ever you want with the FirstArrayElement variable.

I'm thinking to write a quick blog post about it, thanks for your idea :-)

Blog post created:

Deletehttp://datanrg.blogspot.com/2019/04/azure-data-factory-extracting-array.html

Hi Rayis,

ReplyDeleteWe have a specific use case somewhat similar to your above two blogs, in which we have a delimited file which contains two columns ID and Data, For ex: ID = 1 and Data = A.

Our requirement is to generate text files out of it using Data Factory, the name of the output file should be same as that of ID column (we may achieve this using variables) and inside those files corresponding data should be written. We don't need any column headers to be included inside the files, just that data text to be written. And we need to upload these files to Azure BLOB.

We are stuck with this requirement past 2 days. It would be great help to us, if you can suggest us with some pipeline flow, in regards to above requirement.

1) LookUp activity to read your file (ID, Data)

Delete2) Output ID into array variable

3) ForEach container using the array variable

4) Within this container use a CopyData or any other File creation tasks to create your files (create Folder/Filename) parameters for your sink dataset) and then pass your ID file into those sink dataset parameters).

Logic may vary, but at least you can get an idea how to process your files.

Instead of variable we can use parameter as well. Can you please let know the difference between the parameter and variable inside Data Factory.

DeleteSorry for the late response. There is a lot of similarities between variables and parameters. One of the differences is that you can reference parameters externally when you execute you ADF pipeline, and variable are only visible within a particular pipeline.

DeleteSo, exactly how do you pass dataset values into data sink parameters? I cannot find a way to do this.

DeleteSo, exactly how do you pass dataset values into data sink parameters for a custom file name?

DeleteHi Rayis,

ReplyDeleteThanks for your great help !!

I achieved this as per your suggested flow. But one quick question, As I did some research, LookUp activity of ADF can only process 5000 rows upto 2 MB in size.

Here we have a requirement of processing 1 million rows. I mean we generally process more records in BI projects through SSIS.

Just want to confirm, Is there any functionality through which we can implement the same in ADF ?

Yes, I agree, the Lookup in the Control Flow of the ADF is for small datasets only, it has its own memory limitation.

DeleteFor a larger datasets I would suggest using Lookup/Exists component of the Mapping Data Flows in the ADF, where along with parameterized Sink you can have your destination file naming dynamically.

However I haven't test this route yet, you will be the first one! Tell me how it goes in your case.

Hi Rayis,

ReplyDeleteWondering if this can be done via look up and set variable.

I have 3 variables a,b,c all strings.

The lookup returns 3 rows. the output columns would be value and packagename.

In the set variable, need to check if packagename contains string, if yes, set variable with the value column.

Please advice.

Thank you.

Your lookup activity output comes out as an array so you can reference each rows individually referencing them with []. And you can check either particular values of your packagename or if it has any values, and then you can assign lookup row output into either a,b,c.

DeleteThank you Rayis. Is it possible to iterate the lookup output within the set variable acitivity and check for the condition and set the variable?

DeleteYes, this should be possible as well. You will just need to use a ForEach loop container, pass the output of your Lookup activity to the list of items to the ForEach, and then within your loop container use IF and Set Variable activities wisely.

Deletethank you.

DeleteHello, it is possible to read a flat file (configuration file) and set the values of each register in variables to be used later in another pipeline

ReplyDeleteYes, it is possible, you can read it once and save and process the output of your config file into variable and then pass them into your sub-pipelines.

DeleteHello, it is possible to read a flat file (configuration file) and set the values of each register in variables to be used later in another pipeline

ReplyDeleteYes, it is possible, you can read it once and save and process the output of your config file into variable and then pass them into your sub-pipelines.

DeleteHi Rayis, from the below variable output i was trying select only name . Can you please help me with the expression.

ReplyDeleteVariable activity output-

{

"name": "ColumnNamesArray",

"value": [

{

"name": "Item",

"type": "String"

},

{

"name": "UnitPrice",

"type": "String"

},

{

"name": "CreatedDate",

"type": "String"

},

{

"name": "LastModifiedDate",

"type": "String"

},

{

"name": "NewColumn1",

"type": "String"

},

{

"name": "NewColumn2",

"type": "String"

},

{

"name": "NewColumn3",

"type": "String"

}

]

}

What name are you looking for? The name at the top of your JSON output or names within the actual array?

Delete