(2021-Jun-30) One of my university professors liked to tell us a quote, “The Sleep of Reason Produces Monsters”, in a way to help us, his students, to stay active in our thinking process. I’m not sure if Francisco Goya, had a similar aspiration when he was creating his artwork with the same name.

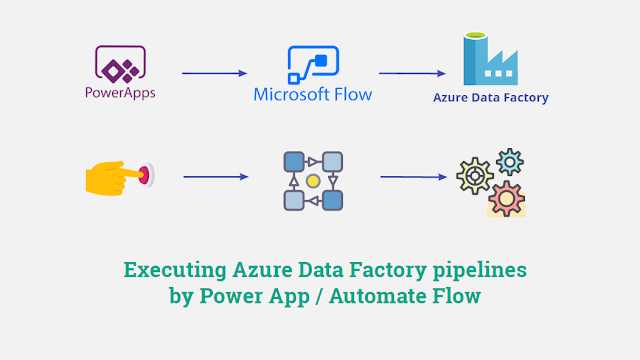

So, let me explain my reasons to create a solution to trigger Azure Data Factory (ADF) pipelines from a Power App and why it shouldn't be considered as a monster :-)

- Schedule trigger: a trigger that invokes a pipeline on a wall-clock schedule.

- Tumbling window trigger: a trigger that operates on a periodic interval, while also retaining state.

- Event-based trigger: a trigger that responds to an event.

- Custom event trigger: a trigger that requires integration with Azure Event Grid (preview)

You can also manually run (execute) your ADF pipelines by using one of the following methods:

- .NET SDK

- Azure PowerShell module

- REST API

- Python SDK

Another option to manually trigger an ADF pipeline is to access the ADF instance itself and visually select a pipeline to run along with passing all necessary parameters. However, this will require elevated permissions, and you wouldn't want to give this access away.

Alternatively, Azure Data Factory exists as a connector in Logic Apps, Power Apps and Power Automate Flows and can support three different actions:

- Cancel a pipeline run: This operation cancels a pipeline run by its run ID.

- Create a pipeline run: This operation creates a new pipeline run in your factory

- Get a pipeline run: Get a particular pipeline run execution

Why Power App & Automate Flow?

As flexible as Azure Data Factory can be, it still doesn’t allow regular business users to start a necessary data transformation process whenever they need to, i.e. outside of scheduled time frames. Our business users just wanted to have “their” magic button to press and wait for the results.

Developing a sophisticated web application for business users with Rest API calls to trigger ADF pipelines is a very time-consuming exercise. Power App in contrast provides an easy way to develop an instrument that business users can use and operate, so this choice is settled.

Then I need to create a logical workflow to execute Data Factory pipelines in Azure, and I intuitively think about Azure Logic App. Yes, this is possible and Power App allows connection to Logic App in Azure, but even if Power Platform and Azure are both Microsoft ecosystems, they exist in two separate worlds. So, Power Automate Flow (which is a sibling to Azure Logic App) is another obvious choice for my development.

Power App development

- Technical Pipeline Name text field defines an explicit name of my Data Factory pipeline that I want to execute. This field value is derived based on user interaction with my Power App.

- Technical Parameters text field defines JSON elements with all the parameter values, and those values are derived from the Power App user controls too. Again, your case with the pipeline name and parameters will be different.

- Magic button that will pass Technical Pipeline Name and Technical Parameters values to my Power Automate Flow which then will execute my ADF process.

eFront_ADF_Pipeline_Execute_Only is the actual name of my Power Automate Flow.

Power Automate Flow development

My Power Automate Flow needed to be as generic as possible. It should only have a reference (connection) to my Data Factory; the pipeline name with its parameters to execute should also come from the incoming payload.

(2) Then I transform "var_power_app_payload" variable into a JSON structure:

(3) Save the 'pipeline_name' JSON element into "var_adf_pipeline_name" variable comes next:

(4) Save the 'parameters' JSON element into "var_adf_pipeline_parameters" variable follows the previous step:

(5) Final, but not the last step is to execute the "Create a pipeline run" step and pass "var_adf_pipeline_name" and "var_adf_pipeline_parameters" variables along with selecting my Azure Subscription, Resource Group and Data Factory:

(6) After submitting a request to trigger an ADF pipeline we then just return a status of the previous step execution back to the Power App.

As a result, our business users can now interact with their Power App and initiate a data transformation process whenever they want to which will then trigger the Power Automate Flow and execute an actual Data Factory pipeline.

Closing Notes

There is a risk that business users may press their “magic” Power App button multiple times and this will then initiate multiple Data Factory pipeline executions that will run simultaneously. Azure Data Factory allows Concurrency setting on a pipeline level, let’s say, one at a time and other requests to execute the same pipeline will be queued.

However, we didn't need this queued process. Imagine seeing a queue of hundreds of pipelines in Data Factory if someone pressed that Power App button a hundred times.

Paul Andrew created a very elegant solution to check if an ADF pipeline is already running, then gracefully stop the new run - https://mrpaulandrew.com/2020/11/12/get-data-factory-to-check-itself-for-a-running-pipeline-via-the-azure-management-api/.

Another, thanks to Kenwyn Warner, who showed me how to interact with Power Automate Flows from a Power App!

You just solved my problem. Very good article. I was trying to find just this solution... A simple low code user interface that would allow me to trigger Data Factory pipeline executions.

ReplyDeleteThank you!

Thanks for reading my blog post! I see a lot hesitation in the data engineering community of using Power Platform tools, but I don't mind. If they help to solve business problems, I am happy to learn how to use them!

DeleteHi Marco

ReplyDeleteGreetings for the Day !,

I am trying to Understand what are the values that you have passed in the Power Automate Flows in Step 2, 3, 4, 5.

Hello, my name is not Marco, but I can still help you. If you haven't read the post, this is what I'm passing

Delete2){

"inputs": {

"variables": [

{

"name": "var_power_app_payload",

"type": "string",

"value": "@{triggerBody()['Initializevariable-varpowerapppayload_Value']}"

}

]

}

}

3) {

"inputs": {

"variables": [

{

"name": "var_adf_pipeline_name",

"type": "string",

"value": "@body('Parse_JSON_-_Extract_ADF_Pipeline_name_and_Parameters')?['pipeline_name']"

}

]

}

}

4) {

"inputs": {

"variables": [

{

"name": "var_adf_pipeline_parameters",

"type": "object",

"value": "@body('Parse_JSON_-_Extract_ADF_Pipeline_name_and_Parameters')?['parameters']"

}

]

}

}

5) "var_adf_pipeline_name" and "var_adf_pipeline_parameters" variables' values

Hey exactly this is what which i am working on and your solution is eye opener to me. But I have few doubts on the flow. Can you share your email so that I can connect with you to get some insights. You can reach me at sriramrbarani@outlook.com

ReplyDeleteEmail sent

Deletehi there , I my datafacotry is getting triggered however i am getting the error "This request is not authorized to perform this operation." Do we need to add some kind of permission for the powerapp to access datafactory. Thanks in advance.

ReplyDeleteWhen you work on your Power Automate Flow, check if your service account that you're going to be using to connect to ADF has sufficient access to trigger pipelines there and manually test this flow first.

DeleteHi

ReplyDeleteWhat does "PA1_Response" stand for ?

It is a response from the Power Automate flow.

DeleteI've created a simple canvas app, with just a single button and two text input boxes to pass the pipeline name and parameters. I have copy pasted the code from above for 'On Select' property. But I'm getting the below error.

Delete"incompatible type. we can't evaluate your formula because the context variable types are incompatible with the types of values in other places in your app."

Please advise.

I'm getting the below error's in the 'On Select' property, when I created the canvas app and copy pasted the code from above.

Delete"incompatible type. We can't evaluate your formula because the context varialble types are incompatible with the types of values in other places in your app.

'Run' is an unknown or unsupported function in namespace 'Power App'

The function 'Concatenate' has some invalid arguments.

name isn't valid. 'PA1_Response' isn't recognised."

Please advise.

It all should go down if your Power Automate can be executed successfully and if it can return Success or Failure repspone. Then the call for the Flow from the your App should return this result into a variable of your choice, it can be 'PA1_Response' or it can be something else. You can read more about this here: https://learn.microsoft.com/en-us/power-platform/power-fx/reference/function-set

DeleteMake sure, the your Automate Flow runs successfully first when you test it manually. If you can run it, then Power App development shouldn't be a bit problem for you.

Thank you.

DeleteI ran the flow manually, but still getting this below error.

Unable to process template language expressions in action 'Initialize_variable_-_var_power_app_payload' inputs at line '0' and column '0': 'The template language expression 'triggerBody()['Initializevariable-varpowerapppayload_Value']' cannot be evaluated because property 'Initializevariable-varpowerapppayload_Value' doesn't exist, available properties are ''. Please see https://aka.ms/logicexpressions for usage details.'.

Good, then fix your flow first, don't copy my flow steps just like that. Build your flow that would trigger your ADF pipeline with no parameters first, make sure the ADF is executed successfully. Then add a parameter to your ADF pipeline but still pass it manually in your Flow. Only then when you 100% sure, that your ADF pipeline with expected hard-coded parameter value can execute via your flow, make more flexible and pass the value of the flow payload variable from your power app. It's a gradual and painful process, but when you finish it your will be happy to complete it.

DeleteThank you. I will work on my flow.

DeleteGood, keep me posted on your progress.

ReplyDeleteI ran my flow manually and got stuck with an issue which I can't seem to resolve.

DeleteActually I have created my pipeline in Synapse instead of Data Factory. When I pass the pipeline name and the Synapse workspace name for Data Factory field in the create a pipeline run, i'm getting the below error.

"The Resource 'Microsoft.DataFactory/factories/Synapseworkspacename' under resource group 'rg-name' was not found. For more details please go to https://aka.ms/ARMResourceNotFoundFix"

In Azure portal, the Synapse workspace is under the resource group I have created. And for some reason, its not getting picked up in the Power Automate data flow.

Check with Microsoft Support if connection to a Synapse workspace based pipeline can be accessed in Power Automate. This is the current info on ADF pipeline connector: https://learn.microsoft.com/en-us/connectors/azuredatafactory/

DeleteHello, To develop the job which trigger the ADf pipeline using PowerApp do we need Service account? if yes how we provide access to service account at subscription level

ReplyDeleteWhen you develop your solution: ADF, Power App, Power Automate. You can use your own AAD account. Then once you finish all the initial testing, you can create a dedicated AAD account that would have access to ADF via RBAC and then switch all the existing connections in Power App from your personal AAD account to the new AAD account that you create.

DeleteAny ideas on how to get the success status of a triggered pipeline

ReplyDeletePower Automate doesn't wait the finish of the ADF pipeline execution it triggers. You can poll the status if you want to, but this will have to be built separately.

Deletevoce conseguiu me ajudar bastante, so tenho a duvida de qual codigo esta naquela validação de true e false que voce ensina no video

ReplyDeleteGreat Post! Thanks for sharing with us!

ReplyDelete