(2019-Apr-28) Full credit goes to Microsoft for the latest efforts updating Azure products with new features and documenting corresponding changes for the end users. Azure Data Factory (ADF) is a great example of this.

A user recently asked me a question on my previous blog post (Setting Variables in Azure Data Factory Pipelines) about possibility extracting the first element of a variable if this variable is set of elements (array).

So as a spoiler alert, before writing a blog post and adding a bit more clarity to the existing Microsoft ADF documentation, here is a quick answer to this question. The first element of an ArrayVariable is extracted by this expression: @variables('ArrayVariable')[0].

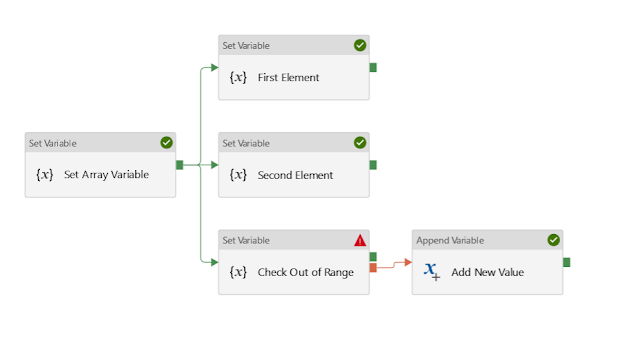

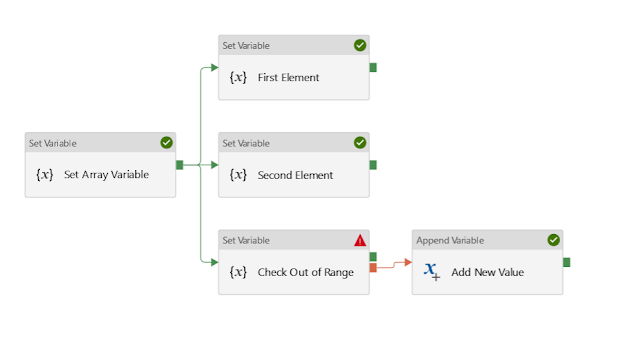

I created a simple ADF pipeline to test this out:

And here the list of variables that I plan to use in this testing ADF pipeline:

1) I Set my ArrayVariable to two characters "A" & "B": @createArray('A', 'B')

2) Then I extract the first element of this array into ArrayElement_1:=@variables('ArrayVariable')[0]

3) Second element of this array is extracted into ArrayElement_2:=@variables('ArrayVariable')[1]

4) Also, I check an out-of-range behavior of the ArrayVariable by setting ArrayElement_OutOfRange: =@variables('ArrayVariable')[2]

Which gives me the following error message and confirms that I can't make a reference to an element in my array variable that is outside of its boundaries.

Summary:

The use-case of referencing array elements by using square brackets is quite broad. And now it will be easier to use another activity output, like child items of GetMetaData activity task. You either can scan this list of values using ForEachLoop container or in case if you know the exact position of your array elements, then you can just extract those values with square brackets referencing.

Code of this blog post ADF pipeline can be accessed in my personal GitHub repository:

https://github.com/NrgFly/Azure-DataFactory/blob/master/Samples/pipeline/bdata_adf_variable_array_many_elements.json

Happy data adventures!

A user recently asked me a question on my previous blog post (Setting Variables in Azure Data Factory Pipelines) about possibility extracting the first element of a variable if this variable is set of elements (array).

So as a spoiler alert, before writing a blog post and adding a bit more clarity to the existing Microsoft ADF documentation, here is a quick answer to this question. The first element of an ArrayVariable is extracted by this expression: @variables('ArrayVariable')[0].

I created a simple ADF pipeline to test this out:

And here the list of variables that I plan to use in this testing ADF pipeline:

1) I Set my ArrayVariable to two characters "A" & "B": @createArray('A', 'B')

2) Then I extract the first element of this array into ArrayElement_1:=@variables('ArrayVariable')[0]

3) Second element of this array is extracted into ArrayElement_2:=@variables('ArrayVariable')[1]

4) Also, I check an out-of-range behavior of the ArrayVariable by setting ArrayElement_OutOfRange: =@variables('ArrayVariable')[2]

Which gives me the following error message and confirms that I can't make a reference to an element in my array variable that is outside of its boundaries.

Summary:

The use-case of referencing array elements by using square brackets is quite broad. And now it will be easier to use another activity output, like child items of GetMetaData activity task. You either can scan this list of values using ForEachLoop container or in case if you know the exact position of your array elements, then you can just extract those values with square brackets referencing.

Code of this blog post ADF pipeline can be accessed in my personal GitHub repository:

https://github.com/NrgFly/Azure-DataFactory/blob/master/Samples/pipeline/bdata_adf_variable_array_many_elements.json

Happy data adventures!

This would be the same if you where using a Logic App.

ReplyDeleteBut this does help to use more Azure Data Factory resources internally.

Deletehow should we add elements with special characters in array

ReplyDeleteTry to use backslash ( "\" ) character is a special escape character.

Delete

ReplyDeleteit doesn't work for me, I get that error. :( "The expression 'variables('ArrayVariable')[2]' cannot be evaluated because array index '2' is outside bounds (0, 0) of array.",

Seems like that your array variable hasn't been populated yet. Also, try to test lower array elements ID.

DeleteThis is my problem:

ReplyDeletehttps://stackoverflow.com/questions/62182125/azure-datafactory-set-variabe-array-and-for-each-exe-pipelines-activities

can you give me another solution?

Can you share you code repository for this ADF pipeline? Also can you explain in more details what you're trying to achieve? Screenshots don't provide sufficient information.

DeleteI am also facing similar issue in Azure logicApp, I am getting error "Array index '3' is outside of array Bounds (0,2) . I am trying to extract message from Teams and create excel Row .

ReplyDeleteMessage are coming in multiple facts as "Name" "value" pair .

Example:

"Facts":

[ "Name": "A"

"Value": "B"

]

"Facts":

[ "Name": "D"

"Value": "E"

]

Check if you can read/assign elements from your array, based on your error message you can only reference them [0], [1], and [3].

DeleteHi Rayis,

ReplyDeleteI have to break down an array property such intervalcounts [0,0,1,0,1-->0] 48 times to single intervalcount of 1 to 48. Will above method help to get this sort of output. I have tried multiple methods but no resolution.

Hi Harry, regarding your question, "Will above method help to get this sort of output.". Could you be more specific on what "this sort of output" you're expecting to get?

DeleteThanks for your reply first as you are fast to reply and happy to help rather than other bloggers. Ok, the issue is that we normally get JSON Data where interval count comes like this :-"intervalCount": 48,

Delete"intervals": [ 100, 1000, 0, 0, 100 ,0,0,100,100, 1000, 0, 0, 100 ,0,0,100,100, 1000, 0, 0, 100 ,0,0,100,100, 1000, 0, 0, 100 ,0,0,100,100, 1000, 0, 0, 100 ,0,0,100,100, 1000, 0, 0, 100 ,0,0,100]

What I want to achieve is to breakdown above array to single interval count of 30 mins and store it in a tabular format using DF.

Hi Harry, so you want to transpose your array of 48 elements into one table column with 48 rows? Is that the idea?

DeleteYes that is right Rayis, I will be grateful if I can get some help on this.

DeleteHi Rayis, I have used your another article (http://datanrg.blogspot.com/2020/03/transforming-json-to-csv-with-help-of.html)to sort out one bit but not able to flatten it with property values like Intervals[1], intervals[2] and so on. Also I need to add another static mapping which will have current date and timeintervals such as TimeInterval1 : 2020-11-08T00:00:00, TimeInterval2 : 2020-11-08T00:30:00. I am not able to paste picture here so hard to explain.

DeleteThen if Data Flow flatten activity didn't work for you, you can do a very simple Control Flow approach by using a ForEach loop container data factory where input for this loop would be your "intervals" array, set this interval to sequential. If your backend database is SQL Server or any other relational database engine then your call a store procedure within this ADF loop container to add new row to your table based on the array value @item(). Also, in this loop container you can populate your second column with the TimeInterval value.

DeleteAny expression that I can use to add another rows in the mapping for timings for all 48 values intervals.

DeleteIf you can have a @counter variable from (0..47), then this could turn into a simple math formula within your loop container to turn @Counter*30 minutes into a time stamp to add to your constant date part.

DeleteThanks Rayis, understood that means to increment the variable. Also that means I have to perform join as well or aggregate to add this variable to existing Mapping

DeleteYes, you got this right. Control flows may be one of the ways to incorporate this logic. you can always try to resolve either your mapping data flows, or using external code (databricks notebook, azure functions).

Delete