(2023-Feb-20) The previous posts covered the following areas of Metadata-driven pipelines in Azure Data Factory:

These 3 areas suggested that it is feasible to build a data solution in Azure Data Factory (ADF) where a small set of generic ADF pipelines can support a data load stream of multiple sourcing data feeds with different sets of data columns. This requires fewer efforts to create data load pipelines and more energy to configure the required metadata (i.e feed detailed specification) to support the expected flow of your data. What's next?

Now you have all configured data feeds running independently and in parallel, thanks to the support Azure Data Factory functionality. Let's look at the case of using data from individual feeds to populate analytical data tables (they could also be called data warehouse tables) where one analytical table can depend on one or many sourcing feeds and one sourcing feed can contribute to one or many analytical tables, just like a pebble rock at the top of the pile can't stay there without the support of its foundation.

If you work with a data vault methodology and a trying to design your data warehouse, then a dependency pursuit is only relevant to your observation of the relationships between link, hub and satellite tables. And when new data is loaded into the data vault model, you don’t really care about the order each table receives an update since the relationships are built using hash columns using those tables' business keys.

However when you still need to establish the order of the data load process, i.e. loading Dimension tables first and then Fact tables after in the Kimball methodology, unless you’re not using the “late arriving dimension or early arriving facts” approach, then the dependency framework might be helpful.

What I mean by the dependency framework, is a logic that would tell that one dimension table depends on one or more sourcing feeds, thus those feeds need to be processed first. Then this dimension table may support data transformation in another dimension table and a few other Fact tables, thus those fact tables can’t be processed unless you finish populating data in their supporting children objects.

In this hypothetical example, we have a set of sourcing data feeds that in a straight-through or dependable way contribute to the population of Dimension and Fact data tables. Where Fact_1 table is only sourced from a single feed, but to populate data in the Fact_3 table, you will have to wait until the objects in between will obtain their corresponding updates.

A possible solution to resolve this dependency can be done using several methods (the list is not complete):

- “Engraved” or hard-coded solution to load the data with a fixated path that is only stable and manageable up to the point of the next change that may require significant development efforts to update.

- An alternative way to encapsulate all the dependent objects loading logic and steps into a single routine (script, notebook, batch job, stored procedure, the list may go on); this may seem like an elegant approach on the surface, however, it would still require a well-trained individual to maintain the code and knowledge transfer might not be an easy task too;

- Another option to tackle this dependency could be made through a configuration mechanism and hope that such an organized system can still be consumed by a set of very generic data flows (or data pipelines) with fewer efforts to support it in the long run.

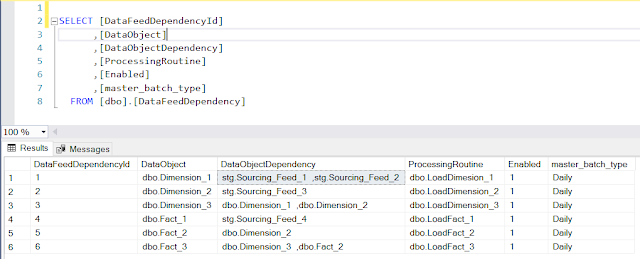

Going back to my example, where those 6 dependencies can be configured in a table format, thus adding more flexibility for future additional dependency-driven feeds: [DataObject] is a parent entity along with the list of its contributing elements stored in the [DaraObjectDependency] column.

So, my sample configuration is done, and that was easy, however, I need to have better visibility for each individual data load step progress. By transposing this condensed dataset I can look into each individual data load process step. A sample SQL code to pivot my dbo.DataFeedDependency table:

CREATE PROCEDURE [dbo].[CreateFeedDependencyExecution] ( @master_batch_id int, @master_batch_ts varchar(100), @master_batch_type varchar(50) ) AS BEGIN SET NOCOUNT ON; BEGIN TRY begin transaction INSERT INTO dbo.DataFeedDependencyExecutionLog ( master_batch_id , master_batch_ts , DataObjectParent , DataObjectChild ) SELECT -- Transpose dbo.DataFeedDependency.DataObjectDependency into multiple rows @master_batch_id , @master_batch_ts , DataObjectParent = dfd.DataObject , DataObjectChild = replace(replace(trim(value), char(10), ''), char(13), '') -- STRING_SPLIT table-valued function result value FROM [dbo].[DataFeedDependency] dfd CROSS APPLY STRING_SPLIT(dfd.DataObjectDependency, ',') -- Transpose String to Column where dfd.Enabled = 1 and dfd.master_batch_type = @master_batch_type UNION SELECT -- Add Dims and Facts as data "feeds" too @master_batch_id , @master_batch_ts , DataObjectParent = dfd.DataObject , DataObjectChild = dfd.DataObject FROM [dbo].[DataFeedDependency] dfd where dfd.Enabled = 1 and dfd.master_batch_type = @master_batch_type -- Stored Procedure output for validation SELECT * FROM dbo.DataFeedDependencyExecutionLog WHERE master_batch_id = @master_batch_id; commit transaction END TRY BEGIN CATCH -- Output few key error statistics in the case of exception or termination rollback transaction; SELECT ERROR_NUMBER() AS ERR_NUMBER, ERROR_STATE() AS ERR_STATE, ERROR_LINE() AS ERR_LINE, ERROR_PROCEDURE() AS ERR_PROCEDURE, ERROR_MESSAGE() AS ERR_MESSAGE; END CATCH END;

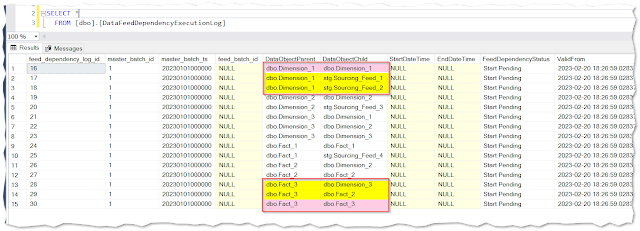

Now for my sample daily batch (master_batch_id =1) all individual data load steps are presented as table rows, thus making a status update process a lot easier.

Example with the dbo.Dimension_1 table object requires the loading of two separate sourcing feeds: stg.Sourcing_Feed_1 & stg.Sourcing_Feed_2. Those two feeds can be configured and independently loaded using a generic set of Data Factory pipelines; such method was described in the 2nd part of this blog series.

Similarly the last piece of our sample puzzle, fact table dbo.Fact_3 will have to wait for the complete and successful processing of its children: dbo.Dimension_3 & dbo.Fact_2 tables.

Then, what’s the whole deal of creating all these table objects, showing an SQL code to transpose dependency configuration table into individual rows? What about the Data Factory pattern, since the data transformation engine is mentioned in the title of the blog post? And what about that line in the pivoted dependency table where the DataObjectParent value equals to DataObjectChild? Let me explain.

For every object that is finished, there has to be a check made: “I’m a child A or a parent B. I’m finished. Have all my parent’s children finished their loading process too? If the answer is No, then this check is complete (other non-finished children will be asked for the same check later). If the answer is Yes, a.k.a. I’m the last processed child of my parent, then all things are ready to start processing my parent’s load (this could be done by calling a stored procedure to load this parent object, or other logical components (notebooks, scripts, etc.) to execute this data flow.

In Data Factory terms, at the end of each loading child’s process, a check is made if all other children are complete too:

Then only after seeing all children being complete, a processing step to load their parents can start and we can update the status of a parent processing in the table row where DataObjectParent = DataObjectChild. We can also have many nesting levels where a parent object can be another parent’s child and the cycle check will keep going.

I didn’t expose the complete Data Factory or SQL code bases described in this blog post, with only a few sample codes and pipeline examples communicated. This was done for a simple reason to start a conversation and share this idea, that a simple “Are We There Yet” approach can be done in Azure Data Factory with small efforts to design generic pipelines, small efforts to define and configure metadata to support this loading process and small efforts for support and further maintenance: a win-win situation!

Comments

Post a Comment